Where accessibility shines in iOS 14

With the latest update to iOS 14, we weren’t disappointed: the list of accessibility enhancements lets everyone make the most of the OS features.

Team Stark

Sep 24, 2020

So did anyone else nerd out over the accessibility features in iOS 14? Because we did. As a team working on accessibility tools, we’re unashamed to say that we eagerly watch keynotes to see where the new operating system is enhancing its accessibility features. And with the latest update we weren’t disappointed.

Believe it or not, Apple introduced its first iPhone accessibility feature with the iPhone 3GS! Yep, that’s right, iPhone OS 3, where it added VoiceOver support. And while the iPhone may not have been the first phone ever with accessibility features, it was certainly the first easy-to-use, accessibility-friendly device adopted by the blind and deaf communities. And today, Apple is embraced not just by those communities, but by individuals with a whole spectrum of temporary, situational, and permanent disabilities. This is because Apple doesn’t treat accessibility as a nice-to-have; it has deeply integrated assistive technologies into its products so that they can be used by everyone.

With that said, we figured we’d put together a list of enhancements that stood out most in their ability to allow everyone to benefit.

FaceTime Sign Language Support

It’s hard not to make this the favorite, so instead we made it the first in the post, since we love all the enhancements. Sign language prominence is a major iteration of FaceTime’s capabilities and a major win for those in the ASL community, joining a number of other assistive features for deaf users. FaceTime can now detect when a participant is using sign language and make that person prominent in a Group FaceTime call, the same way it would for someone speaking aloud.

Siri

Siri has taken a lot of backlash in the past, but Apple’s assistant is put into overdrive in iOS 14, enhancing app accessibility for everyone, by default. From opening and referencing multiple pieces of content at once to aiding in communication, everything is easier than ever. Siri does what any good design is supposed to do: surface the right information at the moment it’s needed.

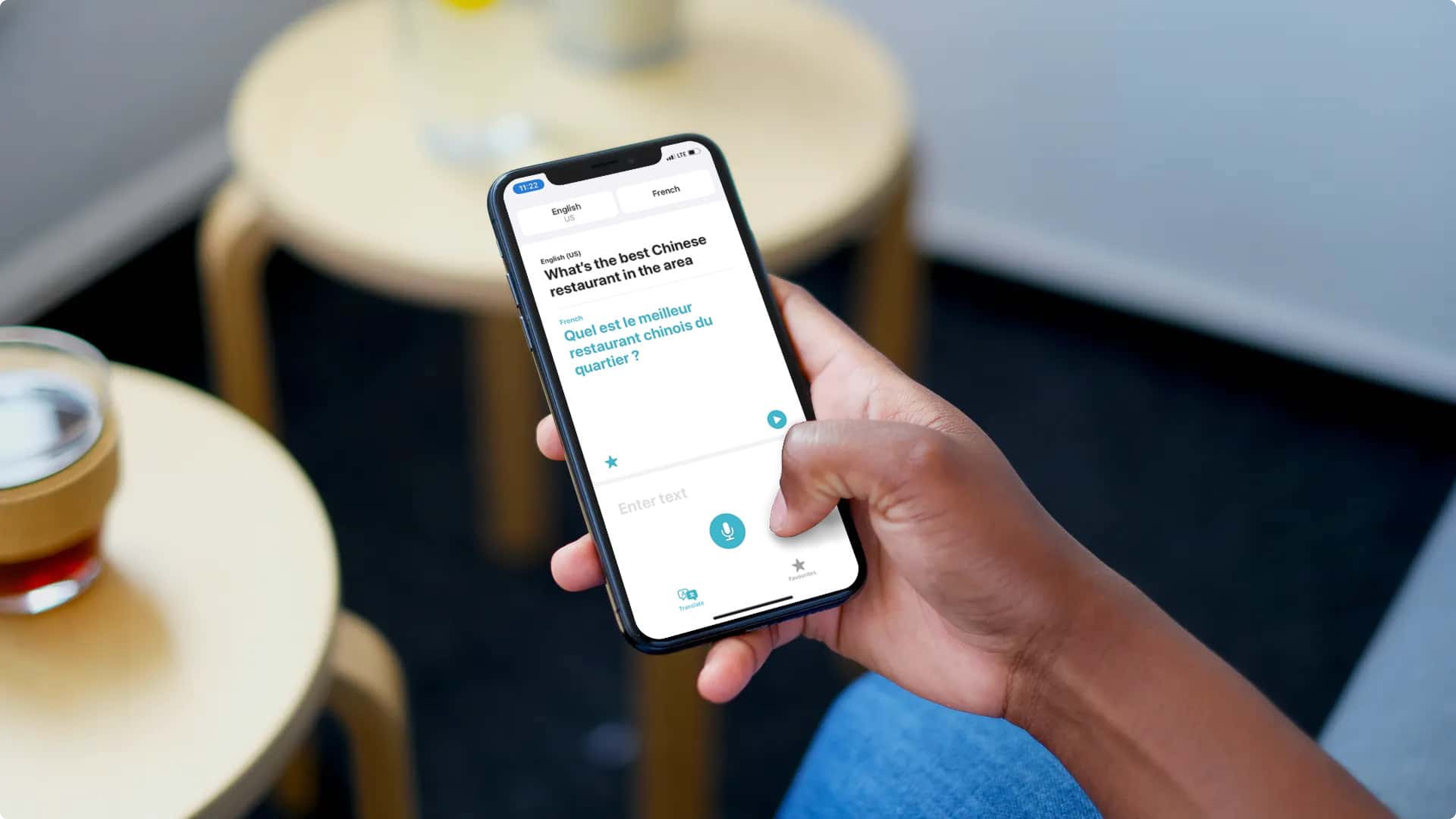

Translate

The Translate app is beautiful. Sure, Google has had one (we know, Android fans), but it’s clear that Apple (as usual) paid attention to how it could be improved for its own customers. Bringing a native translation app to iOS is major for accessibility. No more language barrier to accessing education, finance, health, and more!

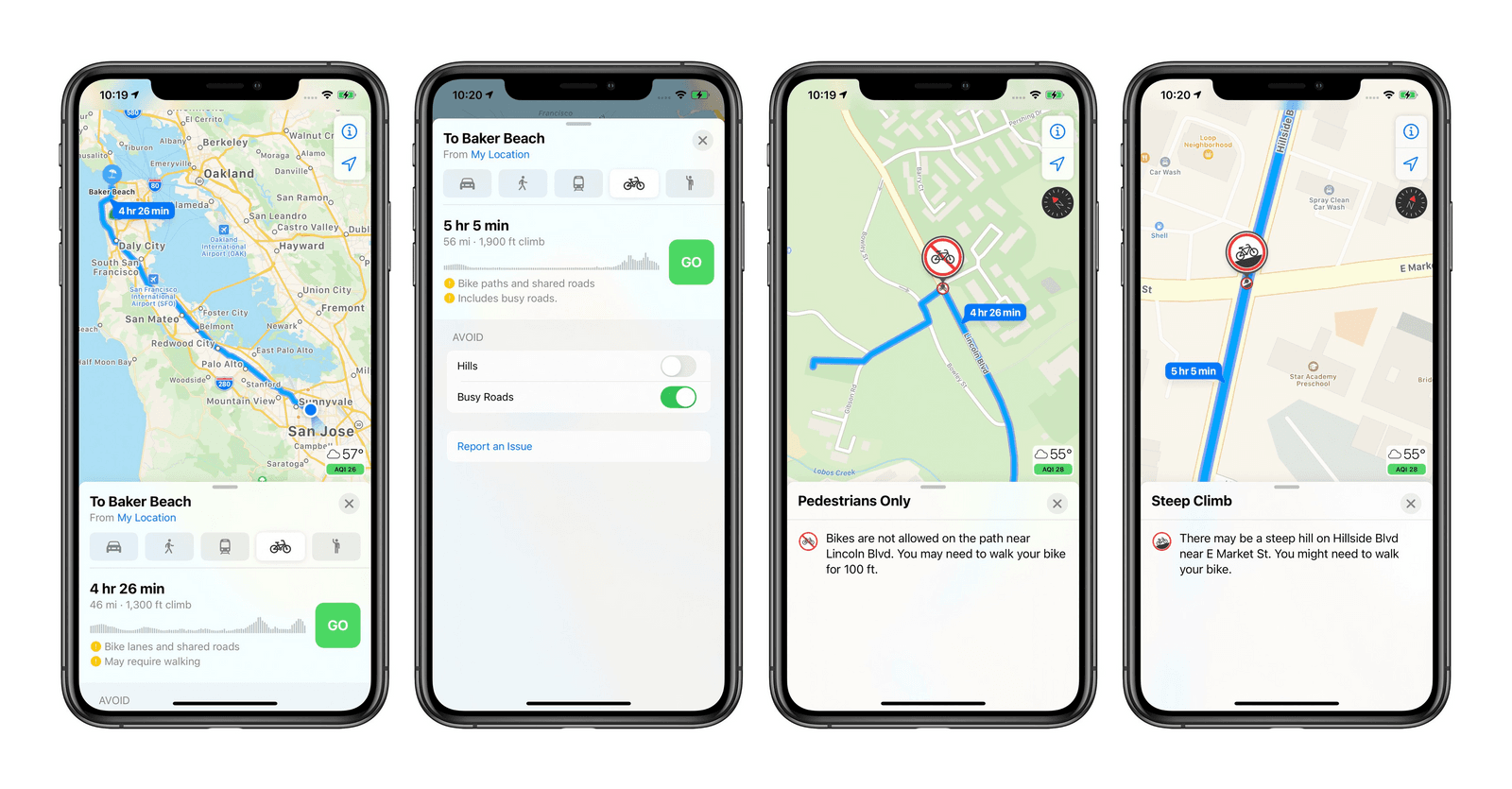

Maps

The update to Apple Maps now informs you when and where there are steep hills and stairs ahead. Having the option to avoid them is great for those who have difficulty walking and for cyclists, and, most importantly, it’s a necessity for those using a wheelchair, crutches, or any other mobility aid.

Often, streets and corporate buildings (especially older ones) don’t make clear ahead of time that there are no elevators to assist individuals. This baked-in accessibility boost helps everyone navigate, both on the go and ahead of time.

Notes

Using the Apple Pencil for Scribble in the Notes app is whoa! The ability to handwrite notes in multiple languages (from English to Chinese characters) and have the system convert them into typed text is incredible. It’ll be exciting to see how folks use this to better navigate presentations and note-taking in academia. What a boost and win for collaboration across languages.

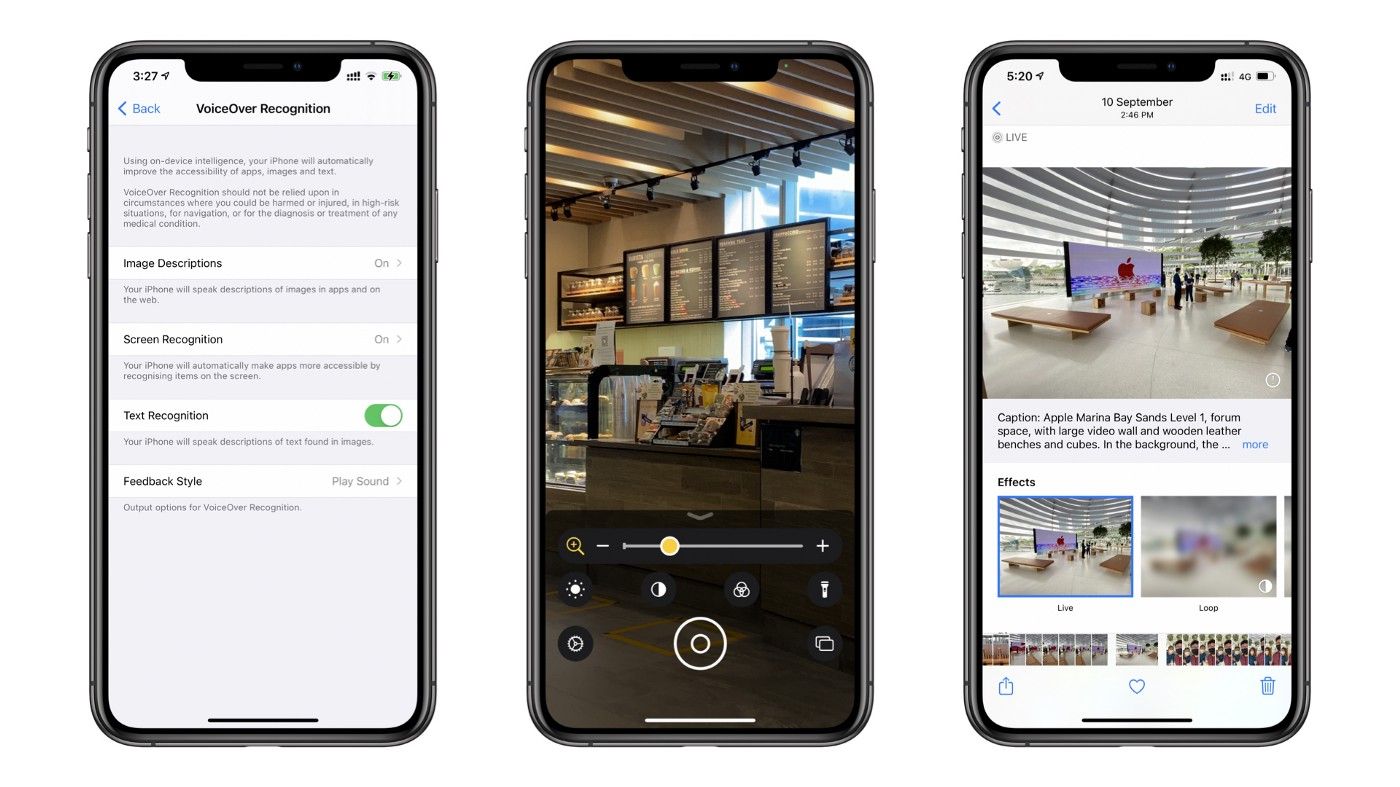

VoiceOver

VoiceOver is Apple’s built-in screen reader. In iOS 14, it can recognize key elements displayed on your screen and add VoiceOver support to app and web experiences that don’t have accessibility support built in. This is super useful and necessary for individuals with a visual and/or physical disability.

With VoiceOver comes Image, Text, and Screen Recognition. Here’s a breakdown of each:

- VoiceOver Recognition, Image Descriptions: reads complete-sentence descriptions of images and photos within apps and on the web.

- VoiceOver Recognition, Text Recognition: speaks the text it identifies within images and photos.

- VoiceOver Recognition, Screen Recognition: automatically detects interface controls to aid in navigating your apps, making them more accessible.

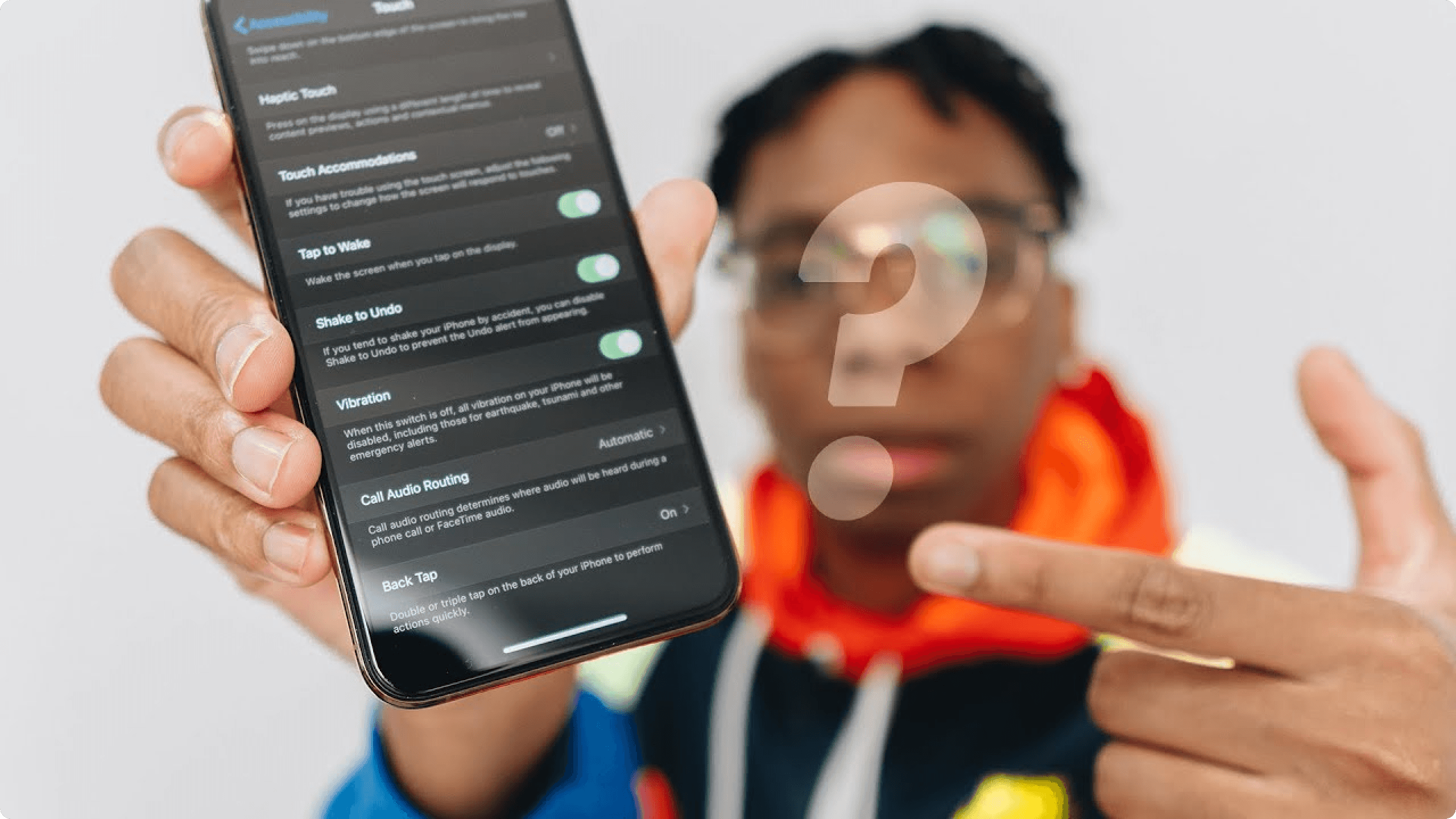

Back Tap

With the new Back Tap feature, you can create easy ways to trigger convenient and frequently used tasks, be it starting VoiceOver or taking a screenshot. It’s super convenient when you need to open accessibility-specific features. Take the screenshot as an example: this is something you’d otherwise have to do with two hands and/or a strong grip. We’d love to see the data on how many iPhones were cracked in the making of said screenshots, plus how many MB of space all those extra accidental screenshots take up.

You can find the feature by going to Settings > Accessibility > Touch > Back Tap. Want to see it in action? Check out this awesome video from Shevon Salmon.

Though there are a ton of hidden accessibility gems baked into the operating system itself, these are the enhancements in iOS 14 that stood out most and were worth sharing. It’s amazing to see how baking accessibility into the core of the product paves the way for the apps, services, and capabilities that build upon it.

What accessibility features were you most impressed by and excited to see in iOS 14?
